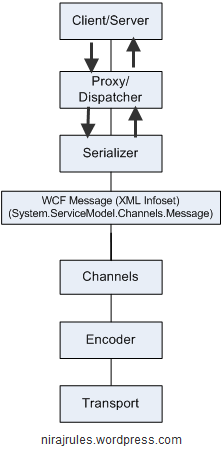

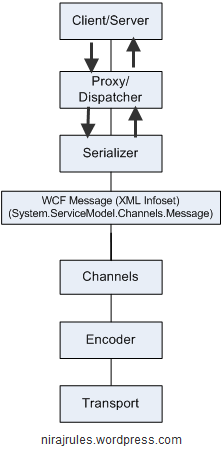

I talked about encodings in a previous blog post; in this one I will talk about serializers. Have a look at the diagram above, which depicts the WCF architecture in its simplest form.

As you can see, a serializer converts a .NET object into a WCF Message (an XML Infoset), whereas an encoder converts that WCF Message into a byte stream. Serializers are governed by service contracts, whereas encoders are specified through the endpoint's binding. WCF supports three serializers: XmlSerializer, DataContractSerializer and NetDataContractSerializer. DataContractSerializer is the default and should always be used unless you need backward compatibility with ASMX or .NET Remoting. Let's explore each serializer in turn.
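To make the serializer/encoder split concrete outside of WCF, here is a minimal sketch using the standalone `DataContractSerializer` (the `Customer` type and the `SerializerVsEncoderDemo` helper are hypothetical). The same serializer produces the same XML Infoset each time, and only the `XmlDictionaryWriter` chosen decides whether that Infoset becomes text XML or .NET binary XML. This is an analogy for what WCF's text and binary message encoders do, not WCF's actual encoder pipeline.

```csharp
using System.IO;
using System.Runtime.Serialization;
using System.Xml;

// Hypothetical data contract used only for this illustration.
[DataContract]
public class Customer
{
    [DataMember] public int Id { get; set; }
    [DataMember] public string Name { get; set; }
}

// Hypothetical helper: one serializer, two "encodings" chosen by the XML writer/reader.
public static class SerializerVsEncoderDemo
{
    public static byte[] Encode(Customer customer, bool binary)
    {
        var serializer = new DataContractSerializer(typeof(Customer)); // object -> XML Infoset
        using var stream = new MemoryStream();
        // The writer decides how the Infoset becomes bytes (text XML vs. binary XML).
        using (XmlDictionaryWriter writer = binary
            ? XmlDictionaryWriter.CreateBinaryWriter(stream)
            : XmlDictionaryWriter.CreateTextWriter(stream))
        {
            serializer.WriteObject(writer, customer);
        }
        return stream.ToArray(); // ToArray still works after the writer has closed the stream
    }

    public static Customer Decode(byte[] bytes, bool binary)
    {
        var serializer = new DataContractSerializer(typeof(Customer));
        using XmlDictionaryReader reader = binary
            ? XmlDictionaryReader.CreateBinaryReader(bytes, XmlDictionaryReaderQuotas.Max)
            : XmlDictionaryReader.CreateTextReader(bytes, XmlDictionaryReaderQuotas.Max);
        return (Customer)serializer.ReadObject(reader);
    }
}
```

Both byte arrays decode back to an equivalent `Customer`; only the wire format differs. That is why the encoder can live in the binding while the serializer stays tied to the contract.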

XmlSerializer, which most of us will know from the ASMX world, is an opt-out serializer. By default it serializes all public read/write fields and properties of a given type and sends them over the wire, so any sensitive data must be explicitly opted out with the XmlIgnore attribute. The advantage of XmlSerializer is the flexibility it gives you in controlling the layout of the XML Infoset (it is schema-driven), which is sometimes needed for compatibility with existing clients. Choosing XmlSerializer over the default DataContractSerializer is easy: just apply the XmlSerializerFormat attribute to your ServiceContract or OperationContract.

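```csharp
using System.ServiceModel;

[ServiceContract]
//[XmlSerializerFormat] // Could be applied here instead, to cover every operation in the contract
public interface IBank
{
    [XmlSerializerFormat] // Use XmlSerializer for this operation only
    [OperationContract]
    Customer GetCustomerById(int id); // Customer is a data type defined elsewhere
}
```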
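Here is a small standalone sketch of the opt-out model and of layout control (the `Customer` members and the `XmlSerializerDemo` helper are hypothetical). Public read/write properties are serialized unless marked `[XmlIgnore]`, and `[XmlAttribute]` moves `Id` from a child element to an attribute.

```csharp
using System.IO;
using System.Xml.Serialization;

// Hypothetical type for illustration.
public class Customer
{
    [XmlAttribute("id")]             // layout control: written as an attribute, not an element
    public int Id { get; set; }

    public string Name { get; set; } // public read/write => serialized by default (opt-out model)

    [XmlIgnore]                      // explicitly opted out: never written to the XML
    public string CreditCardNumber { get; set; }
}

public static class XmlSerializerDemo
{
    public static string ToXml(Customer customer)
    {
        var serializer = new XmlSerializer(typeof(Customer));
        using var writer = new StringWriter();
        serializer.Serialize(writer, customer);
        return writer.ToString();
    }
}
```

Serializing `new Customer { Id = 42, Name = "Ann", CreditCardNumber = "4111-0000" }` yields a `<Customer id="42">` element containing `<Name>Ann</Name>`, with no trace of the card number.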

DataContractSerializer: when moving to the WCF world, Microsoft seems to have decided to put more focus on versioning contracts than on creating them. DataContractSerializer, the default serializer, doesn't give us XmlSerializer's flexibility over the layout of the XML Infoset (though you can still serialize a type that implements IXmlSerializable), but it provides good versioning support through the Order and IsRequired properties of the DataMember attribute and the IExtensibleDataObject interface. There is also a myth that DataContractSerializer only supports types decorated with the DataContract/DataMember or Serializable attributes; in reality its programming model supports a wide range of types (outlined here), including Hashtable, Dictionary, IXmlSerializable and ISerializable types, and POCOs. The DataContractFormat attribute lets you mix it with XmlSerializer by overriding XmlSerializerFormat for an individual operation. Note that if you don't apply either attribute at all, DataContractFormat is applied by default.

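```csharp
using System.ServiceModel;

[ServiceContract]
[XmlSerializerFormat] // Serialize every operation using XmlSerializer...
public interface IBank
{
    [DataContractFormat] // ...except this one, which is overridden to use DataContractSerializer
    [OperationContract]
    Customer GetCustomerById(int id);
}
// IExtensibleDataObject is implemented by data contracts (e.g. Customer), not by service contracts.
```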
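To see the versioning support at work, here is a minimal standalone sketch (`CustomerV1`, `CustomerV2`, the `example.org` namespace and the `VersioningDemo` helper are all hypothetical). A newer version of the contract adds an `Email` member; the older version implements `IExtensibleDataObject`, so it stores the unknown `Email` element in `ExtensionData` and writes it back out, and the data survives a round trip through the old code.

```csharp
using System.IO;
using System.Runtime.Serialization;

// Hypothetical version 1: knows only Id and Name, but keeps unknown members.
[DataContract(Name = "Customer", Namespace = "http://example.org/bank")]
public class CustomerV1 : IExtensibleDataObject
{
    [DataMember(Order = 1, IsRequired = true)] public int Id { get; set; }
    [DataMember(Order = 2)] public string Name { get; set; }
    public ExtensionDataObject ExtensionData { get; set; } // holds members V1 doesn't know
}

// Hypothetical version 2: same contract name/namespace, adds Email.
[DataContract(Name = "Customer", Namespace = "http://example.org/bank")]
public class CustomerV2
{
    [DataMember(Order = 1, IsRequired = true)] public int Id { get; set; }
    [DataMember(Order = 2)] public string Name { get; set; }
    [DataMember(Order = 3)] public string Email { get; set; }
}

public static class VersioningDemo
{
    static byte[] Write<T>(T value)
    {
        using var stream = new MemoryStream();
        new DataContractSerializer(typeof(T)).WriteObject(stream, value);
        return stream.ToArray();
    }

    static T Read<T>(byte[] data)
    {
        using var stream = new MemoryStream(data);
        return (T)new DataContractSerializer(typeof(T)).ReadObject(stream);
    }

    // V2 sender -> V1 intermediary -> V2 receiver.
    public static CustomerV2 RoundTripThroughV1(CustomerV2 original)
    {
        CustomerV1 v1 = Read<CustomerV1>(Write(original)); // Email lands in ExtensionData
        return Read<CustomerV2>(Write(v1));                // ...and is written back out
    }
}
```

If `CustomerV1` did not implement `IExtensibleDataObject`, the unknown `Email` element would simply be dropped when it is read, and the V2 receiver would get a null `Email`.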

Finally, NetDataContractSerializer. To many this is an unfamiliar concept, so let me try explaining it with an example:

```csharp
using System.ServiceModel;

[ServiceContract]
public interface IAdd /* AddService is the implementation class */
{
    [OperationContract]
    int Add(int i, int j);

    ISub Sub { [OperationContract] get; } /* AddService returns new SubService() */
}

[ServiceContract]
public interface ISub /* SubService is the implementation class */
{
    [OperationContract]
    int Sub(int i, int j);
}
```

The problem with the above is that it won't work with either serializer out of the box. Why? WCF shares only contracts, not types, and here you are trying to send a concrete type, SubService, back to the caller. To make this work with DataContractSerializer, you need to use the KnownType or ServiceKnownType attribute:

```csharp
[ServiceContract]
[ServiceKnownType(typeof(SubService))]
public interface IAdd { …
```
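The same rule can be reproduced with the standalone `DataContractSerializer`. In this minimal sketch (the `Shape`, `Circle` and `Drawing` types and the `KnownTypeDemo` helper are hypothetical), a member declared as the base type holds a derived instance at runtime. Without a known-types list the serializer rejects it; passing `Circle` through `DataContractSerializerSettings.KnownTypes`, the programmatic counterpart of `[KnownType]`/`[ServiceKnownType]`, makes the round trip work.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;

// Hypothetical types for illustration.
[DataContract]
public class Shape
{
    [DataMember] public string Label { get; set; }
}

[DataContract]
public class Circle : Shape
{
    [DataMember] public double Radius { get; set; }
}

[DataContract]
public class Drawing
{
    [DataMember] public Shape Item { get; set; } // declared as the base type
}

public static class KnownTypeDemo
{
    // Serializes and deserializes a Drawing, declaring only the given known types.
    public static Drawing RoundTrip(Drawing drawing, params Type[] knownTypes)
    {
        var settings = new DataContractSerializerSettings { KnownTypes = knownTypes };
        var serializer = new DataContractSerializer(typeof(Drawing), settings);
        using var stream = new MemoryStream();
        serializer.WriteObject(stream, drawing); // throws SerializationException if Circle is unknown
        stream.Position = 0;
        return (Drawing)serializer.ReadObject(stream);
    }
}
```

Decorating `Shape` with `[KnownType(typeof(Circle))]` would have the same effect without touching the serializer's construction.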

Or you can use NetDataContractSerializer. The WCF team discourages its use, so there is no built-in attribute to apply it; luckily, it's quite easy to create one, as shown here. With NetDataContractSerializer there is no need to declare subtypes: the actual type information travels over the wire and the type is loaded automatically on the receiving side.

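```csharp
using System.ServiceModel;

[ServiceContract]
public interface IAdd /* AddService is the implementation class */
{
    [OperationContract]
    int Add(int i, int j);

    // NetDataContractFormat is the custom attribute mentioned above, not a built-in WCF attribute
    ISub Sub { [NetDataContractFormat][OperationContract] get; }
}
```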

Note that both of the above approaches require your implementation assembly to be present on the client side (WCF has no equivalent of .NET Remoting's MarshalByRefObject).

There are a few more things you should care about in WCF serialization, and in DataContractSerializer in particular, all of which you can control through DataContractSerializer's constructor:

1) maxItemsInObjectGraph, which controls the maximum number of items your object graph can contain; the default is 65536. You can also set it through behaviors in the configuration file, or on the service side through the ServiceBehavior attribute. There are no attributes for endpoint behaviors, because they don't map to static programming constructs, so on the client side you have to set maxItemsInObjectGraph explicitly in code or through configuration:

```xml
<behaviors>
  <serviceBehaviors>
    <behavior name="largeObjectGraph">
      <dataContractSerializer maxItemsInObjectGraph="100000"/>
    </behavior>
  </serviceBehaviors>
  <endpointBehaviors>
    <behavior name="largeObjectGraph">
      <dataContractSerializer maxItemsInObjectGraph="100000"/>
    </behavior>
  </endpointBehaviors>
</behaviors>
```

2) preserveObjectReferences, which helps you deal with circular references, such as a Person whose Children point back to their parent, or bidirectional references between an Order and its line items.

3) IDataContractSurrogate, which I will let you figure out yourself (this post has already crossed my normal word limit 🙂; MSDN link here).
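Items 1 and 2 can be tried outside WCF with a standalone serializer. Here is a minimal sketch that passes both settings through `DataContractSerializerSettings`, which newer versions of .NET offer as an alternative to the long constructor overloads (the `Person` type and the `GraphSettingsDemo` helper are hypothetical). Without reference tracking, a graph whose children point back to their parent is rejected as cyclic; with it, the back-references are restored on deserialization; and a deliberately tiny `MaxItemsInObjectGraph` makes serialization fail.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;

// Hypothetical type: children point back to their parent, creating a cycle.
[DataContract]
public class Person
{
    [DataMember] public string Name { get; set; }
    [DataMember] public Person Parent { get; set; }
    [DataMember] public List<Person> Children { get; set; } = new List<Person>();
}

public static class GraphSettingsDemo
{
    public static byte[] Serialize(Person graph, int maxItems, bool preserveReferences)
    {
        var settings = new DataContractSerializerSettings
        {
            MaxItemsInObjectGraph = maxItems,            // quota on items in the graph
            PreserveObjectReferences = preserveReferences // emit z:Id / z:Ref instead of copies
        };
        var serializer = new DataContractSerializer(typeof(Person), settings);
        using var stream = new MemoryStream();
        serializer.WriteObject(stream, graph);
        return stream.ToArray();
    }

    public static Person Deserialize(byte[] data)
    {
        var settings = new DataContractSerializerSettings { PreserveObjectReferences = true };
        using var stream = new MemoryStream(data);
        return (Person)new DataContractSerializer(typeof(Person), settings).ReadObject(stream);
    }
}
```

Both failures surface as a `SerializationException` from `WriteObject`, which is also how a service or client typically sees an exceeded quota or an untracked cycle.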

I look forward to reading about your experiences with WCF serializers.